Category:

AI Product Concept

Created For:

Conceptual Prototype

An experimental mobile concept for mood-driven music generation, blending AI conversation, audio personalization, and emotional wellbeing.

Goal

Design an AI-powered music experience that makes users feel emotionally seen: one that truly vibes with them, all the time. Instead of picking from playlists or genres, users simply chat with MELO to generate beats based on their feelings, situations, or ambient context.

Design Vision

This concept reimagines the traditional music app as a therapeutic, conversational companion: a space where music is not just consumed but co-created. The user tells MELO how they’re feeling, and MELO replies with a custom lo-fi track tailored to that moment. The result feels deeply personal and refreshingly intuitive.

This idea was born from a simple frustration: no playlist ever fully gets you. So what if you could just ask for one that does?

Key Features & Tools

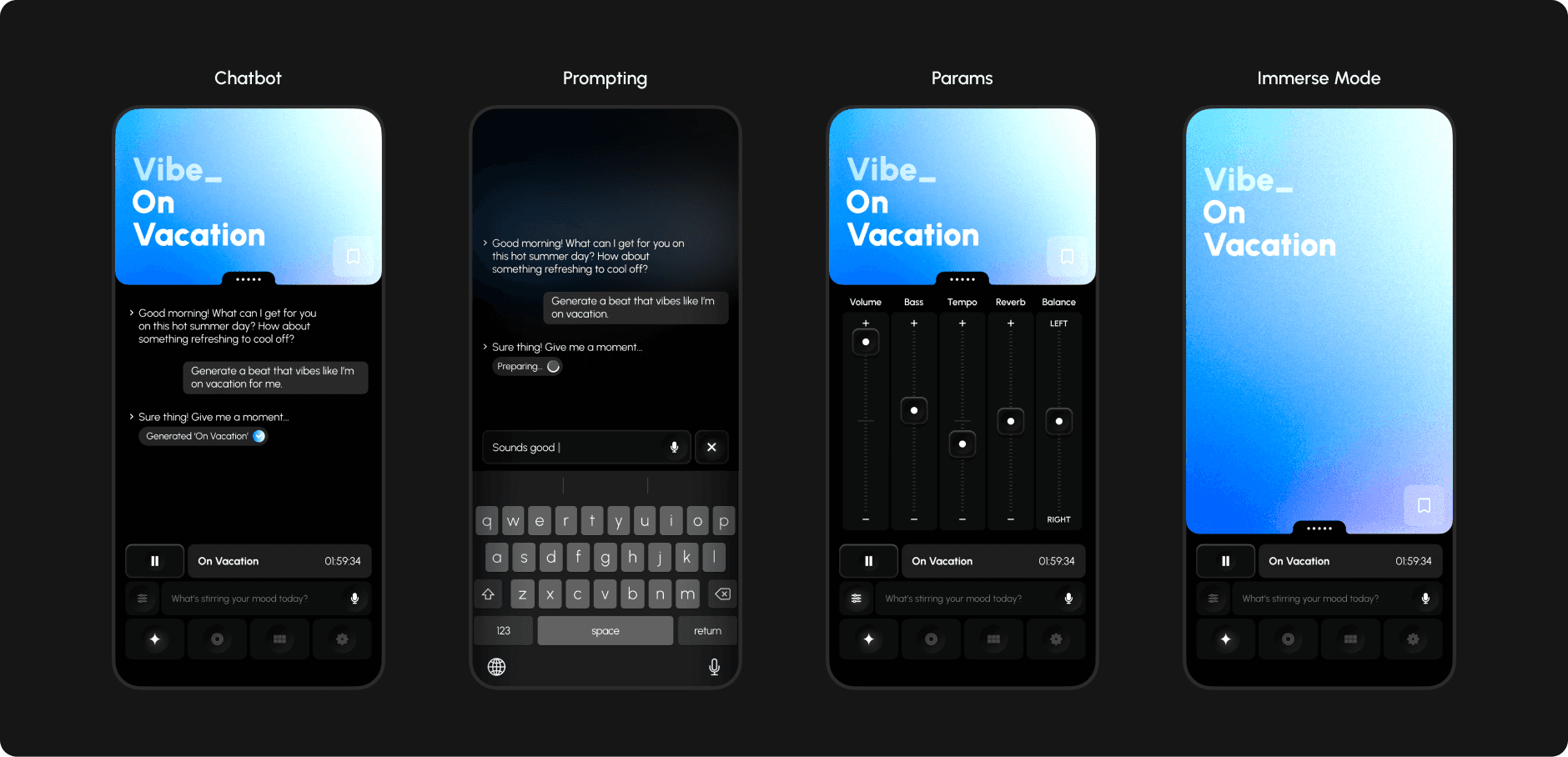

Conversational Music Generation

Users describe how they feel (e.g., “Give me something that feels like I’m on vacation”), and MELO responds with a unique beat and a name for it. Everything starts with a chat.

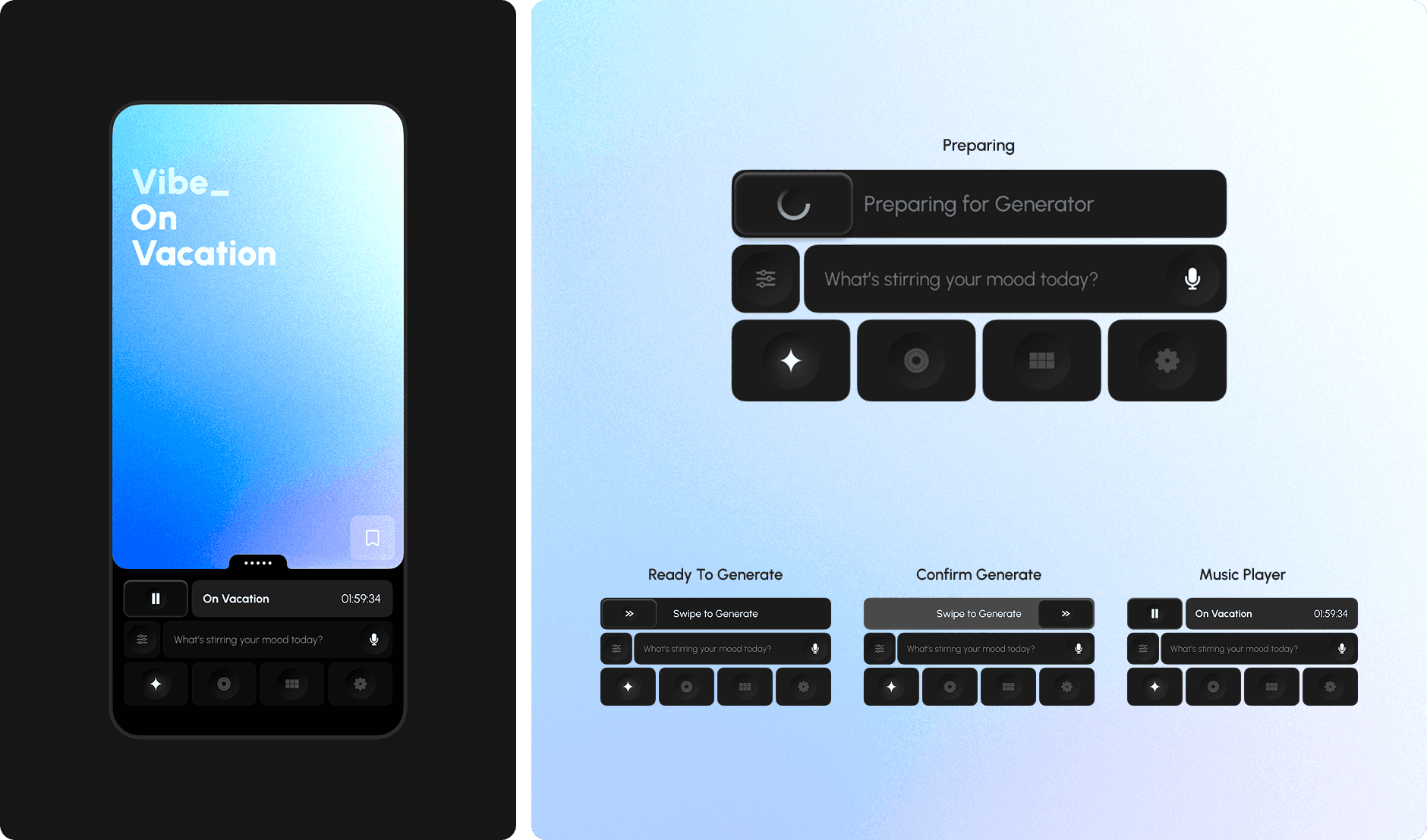

Utility Bar with Smart States

The home screen includes a utility bar with four adaptive states: 1. Prompting → 2. Generating → 3. Playing → 4. Immersing

Each visual state reflects where you are in the experience.
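Because each state has a clear entry point, the utility bar can be thought of as a small state machine. The sketch below is purely illustrative, since MELO has not been built: the four state names come from the flow above, while the event names (`submitPrompt`, `trackReady`, and so on), the `nextState` function, and the allowed transitions are hypothetical assumptions.

```typescript
// Hypothetical sketch of MELO's utility bar as a state machine.
// State names come from the case study; event names and transitions are assumptions.
type UtilityBarState = "prompting" | "generating" | "playing" | "immersing";

type UtilityBarEvent =
  | "submitPrompt"   // user sends a mood prompt in the chat
  | "trackReady"     // generation finished and the beat starts playing
  | "enterImmersive" // user opens the full-screen mode
  | "exitImmersive"  // user leaves the full-screen mode
  | "newPrompt";     // user starts a new chat prompt

// Allowed transitions per state; any event not listed for a state is ignored.
const transitions: Record<UtilityBarState, Partial<Record<UtilityBarEvent, UtilityBarState>>> = {
  prompting: { submitPrompt: "generating" },
  generating: { trackReady: "playing" },
  playing: { enterImmersive: "immersing", newPrompt: "prompting" },
  immersing: { exitImmersive: "playing" },
};

function nextState(state: UtilityBarState, event: UtilityBarEvent): UtilityBarState {
  // Events that don't apply to the current state leave it unchanged.
  return transitions[state][event] ?? state;
}
```

Starting from `"prompting"`, the events `submitPrompt`, `trackReady`, and `enterImmersive` walk through all four states in order, while an out-of-sequence event (such as `trackReady` during prompting) is ignored. Keeping the transitions in one table mirrors a component with one visual variant per state.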

Beat Customization Panel

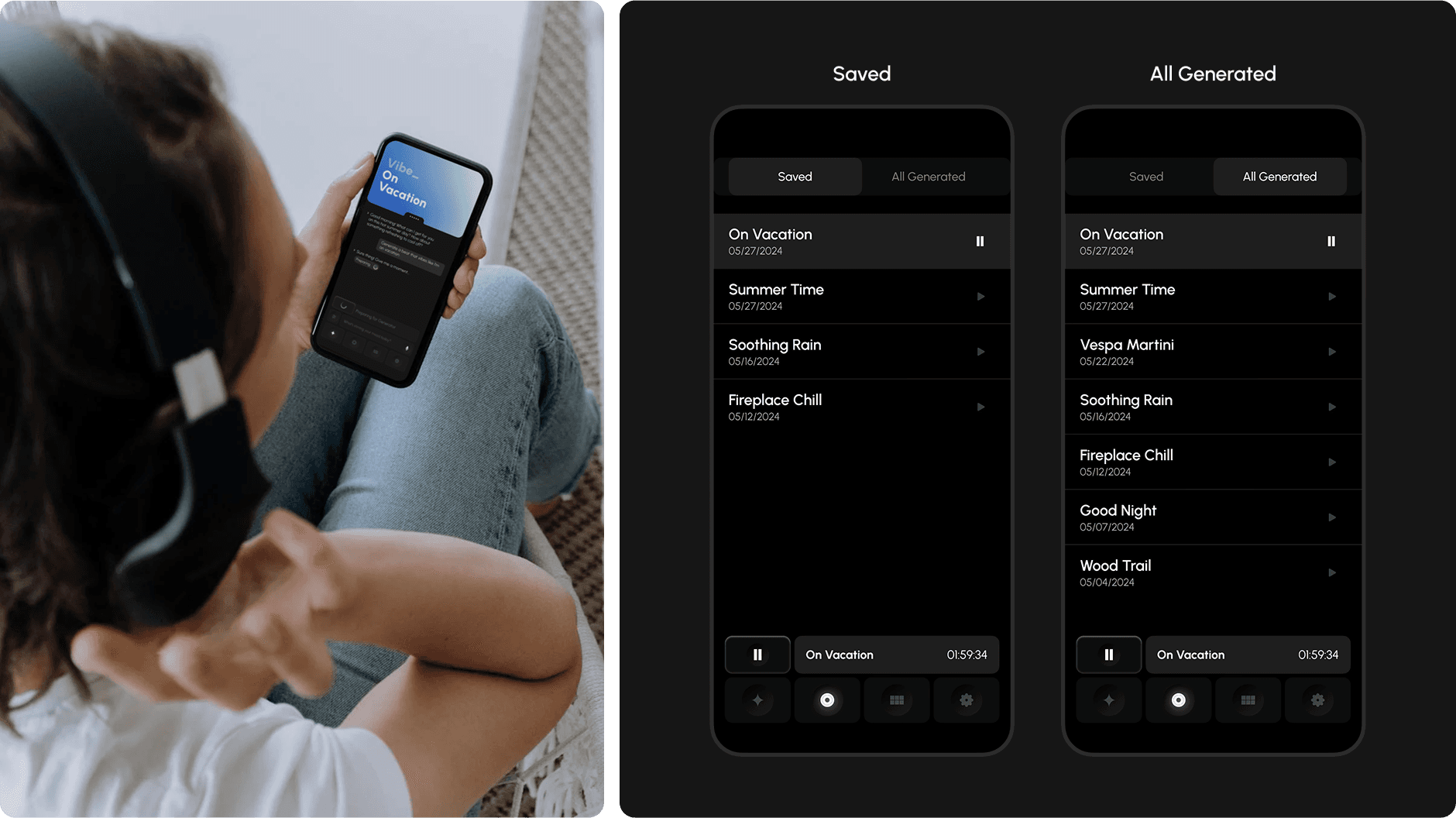

Simple, DJ-style sliders let users fine-tune volume, tempo, bass, reverb, and balance, giving deeper control without breaking the flow.

Saved Tracks & Mood History

All generated beats are logged and saved. Users can access their own sonic archive via the “Saved” and “All Generated” tabs.

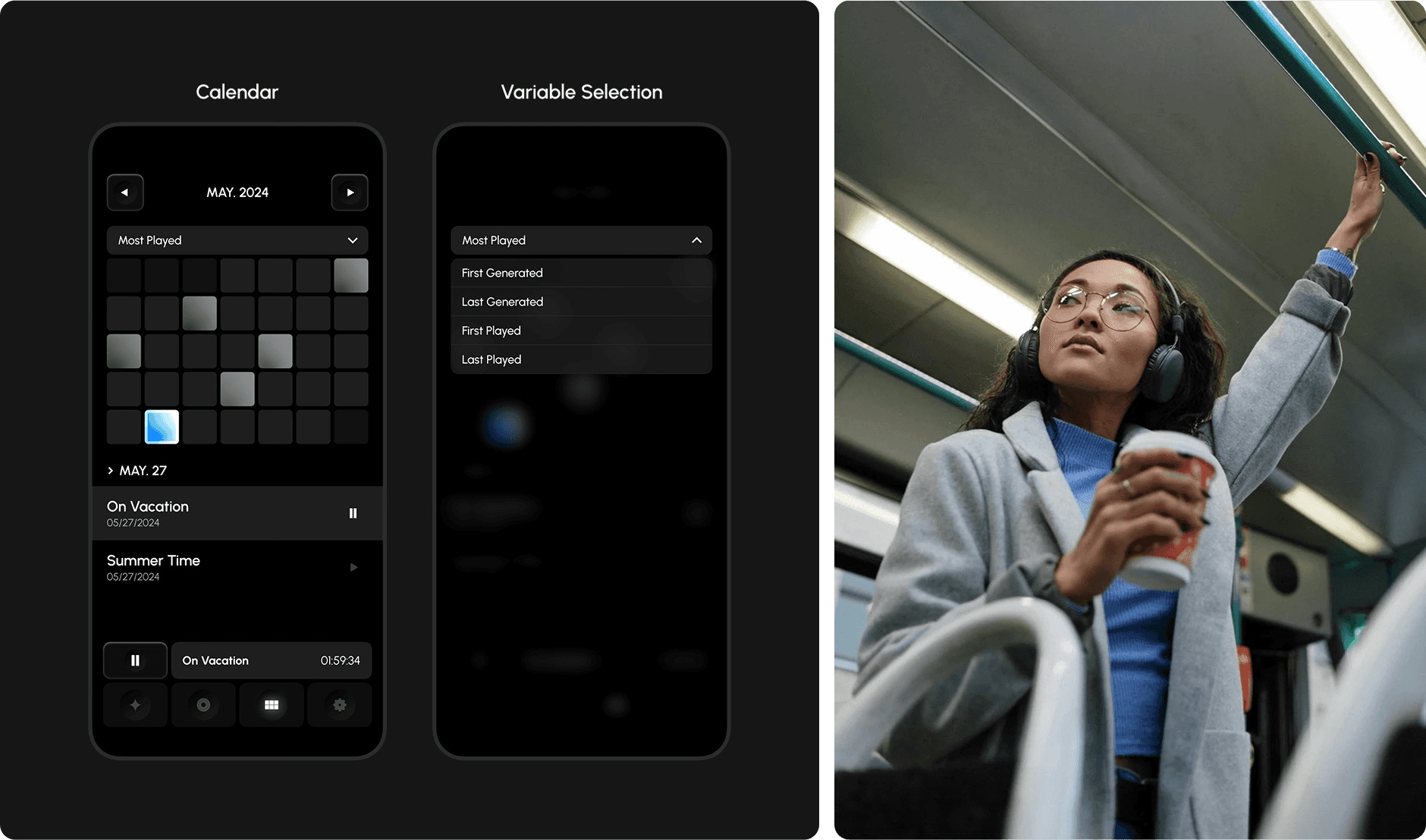

Mood Calendar with Variable Filters

Tracks are automatically recorded in a mood calendar, where users can see what they listened to, when, and why.

Filters such as most played, first generated, and last played help emotional trends emerge over time.
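Each calendar filter amounts to a different sort order over the logged tracks. The sketch below is a hypothetical illustration only: the `MoodEntry` fields (prompt, timestamps, play count), the filter keys, and `applyFilter` are assumptions about how such a log could be stored, not part of the original design.

```typescript
// Hypothetical shape of one mood-calendar entry; field names are assumptions.
interface MoodEntry {
  trackName: string;  // the name MELO gave the beat
  prompt: string;     // what the user said (the "why")
  generatedAt: Date;  // when the beat was created
  lastPlayedAt: Date; // most recent playback (the "when")
  playCount: number;
}

type CalendarFilter = "mostPlayed" | "firstGenerated" | "lastPlayed";

// One comparator per filter named in the case study.
const comparators: Record<CalendarFilter, (a: MoodEntry, b: MoodEntry) => number> = {
  mostPlayed: (a, b) => b.playCount - a.playCount,                             // highest count first
  firstGenerated: (a, b) => a.generatedAt.getTime() - b.generatedAt.getTime(), // oldest first
  lastPlayed: (a, b) => b.lastPlayedAt.getTime() - a.lastPlayedAt.getTime(),   // most recent first
};

function applyFilter(entries: MoodEntry[], filter: CalendarFilter): MoodEntry[] {
  // Sort a copy so the stored history keeps its original order.
  return [...entries].sort(comparators[filter]);
}
```

Sorting a copy rather than the stored list means switching filters never rewrites the underlying history, which keeps the calendar view and the “All Generated” archive consistent.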

Minimal Immersive Mode

Once a beat is playing, users can switch to a clean full-screen mode that removes distractions and lets the music lead.

Content & Component Architecture

The app is structured as a scalable, modular system, designed so screens and components can be reused and extended.

Key screen categories include:

Home: chat, generation, customization

Saved: access previously generated tracks

Calendar: emotional journaling through music

Settings: account & permissions

Each screen is built using reusable sub-components for adaptability across screen sizes.

Why This Design?

Music is emotional, but most music apps aren’t.

They organize music by genre or algorithm, but they don’t talk to you. MELO changes that.

This project explores what happens when we use conversation as a design interface, turning mood into sound and sound into memory.

It was also a playground for experimenting with:

Micro-interaction states

Modular architecture

Natural-language prompting outside an e-commerce context

My Role

I led this project independently as a self-initiated design challenge.

Responsibilities included:

UX strategy & user flows

Visual design & prototyping

Component mapping & UI system

Microcopy & tone-of-voice definition

Concept validation through desk research

What’s Next

MELO remains an experimental prototype—not yet built, but full of potential.

Its core idea—mood-driven, chat-based creativity—is something I’d love to explore further in collaborations or real-world builds.